Came to second this. I have an old HP Chromebook that is indestructible, has insane battery life, and still has a few years of updates left. The built-in Linux terminal is fine, and just about anything you can get through apt-get, dpkg, or otherwise works fine as well (if there is an ARM version). It’ll even add menu entries for GUI apps.

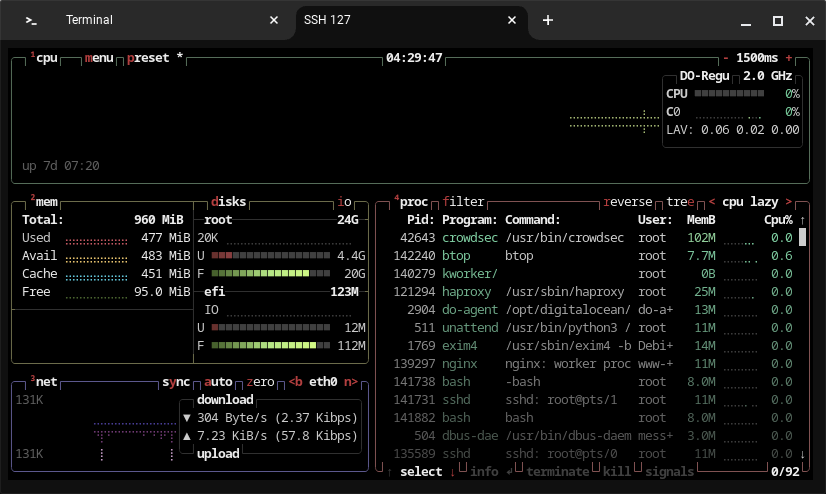

I do light reading or dev work on it, and use the built-in terminal to keep track of and ssh into my remote boxes. I take it on the road to take notes or hop on Wi-Fi.

When I first got it, the interface was kinda crap for a laptop, but through the updates (dark mode, new menu, etc.) it’s actually just fine now.

It’s slow, low on RAM, and only usable for a few tabs at a time, but for what I use it for it does fine, and it was cheap enough that I won’t cry if it dies.

Mine is a 2020 with 32 GB storage and 3 GB RAM, but same ballpark. I just replaced my PC earlier this year, and the Chromebook is next. I’m looking at renewed HP EliteBooks or renewed ThinkPads, but I’m not sure either comes in a size OP would want.